Outsourcing AI projects: Who is really liable if the GPT makes a mistake?

A scenario that keeps every AI consultant awake at night:

You develop a Custom GPT for a client. The GPT is supposed to create cost estimates. You deliver clean work, document everything, and hand over the tool.

Three months later: The GPT spits out a wrong price. The end customer accepts. The client makes a loss. And suddenly a claim for damages is on the table.

Who pays? You as the developer? The client who uses the tool? Or even both?

This question is not theoretical. It is existential for anyone who develops AI tools for others. And the answer is more complicated than most people think.

The inconvenient truth: AI cannot be liable

Let’s start with the obvious: An AI has no legal personality. It cannot be sued, cannot pay, cannot be held responsible. This means that if an AI system causes damage, a person or a company must always be liable.

The only question is: which one?

German law recognizes several bases for claims that can become relevant when an AI system causes damage:

| Basis of claim | Typical constellation | Practical relevance for AI projects |
|---|---|---|
| Contractual liability | Between developer and client | Main track in the event of project damage such as miscalculations |
| Tort liability (§ 823 BGB) | Towards third parties | In the event of infringements of rights, claims for injunctive relief |
| Product liability | For defective products | Primarily in the event of personal injury, property damage and, in some cases, data damage |
| Organizational fault | At the operator | Lack of training, testing, control |

Important: For typical project damage such as loss of profit or miscalculations, contractual liability is usually decisive. Product liability is particularly relevant in the event of personal injury and property damage – pure financial losses are not the core there.

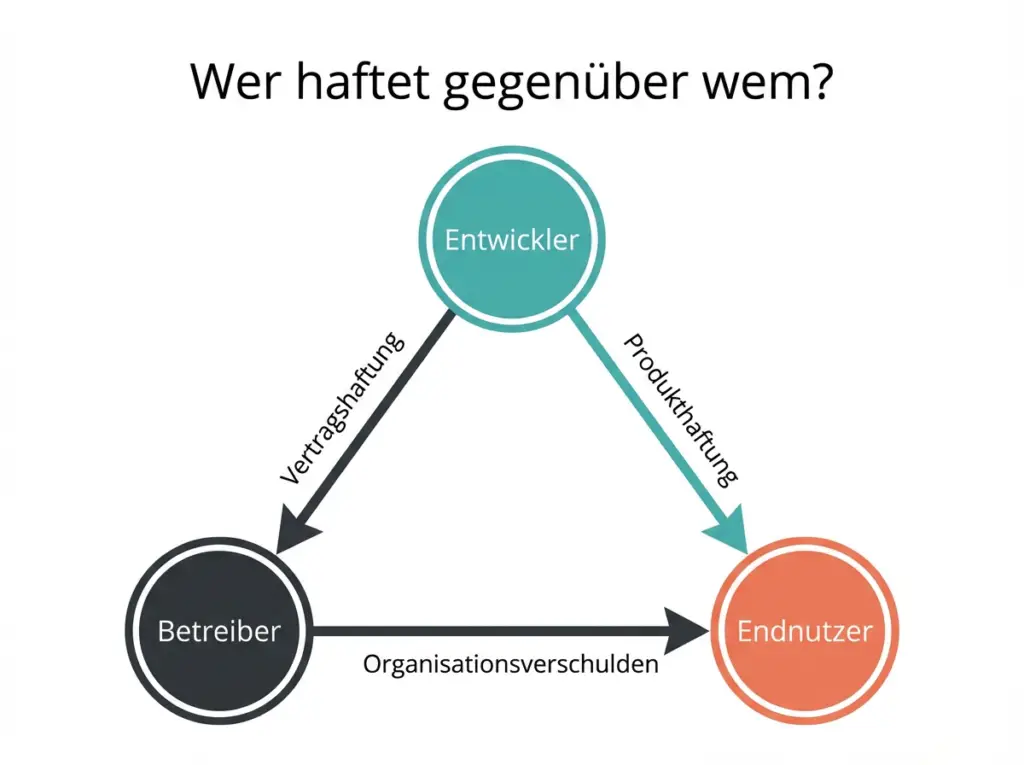

The liability triangle: developer, operator, end user

In a typical AI project, there are at least three parties:

1. The developer (you): You build the Custom GPT, train it, configure the prompts and document the limitations.

2. The operator (your client): The company that uses the GPT either internally or towards customers.

3. The end user: The employee who operates the GPT, or the customer who interacts with it.

When is the developer liable?

You as a developer are liable if:

- The AI system is technically faulty: A bug, a logic error, a faulty configuration that leads to incorrect results.

- The documentation is inadequate: You have not clearly communicated what the tool is suitable for and what it is not.

- You have built in security flaws: The system is vulnerable to manipulation, prompt injection or data leaks.

- You have not complied with guaranteed properties: The GPT cannot do what it should be able to do according to the contract.

The new EU Product Liability Directive (Directive 2024/2853, to be transposed into national law by December 2026) explicitly includes software as a “product” and tightens liability for personal injury, property damage and, in some cases, data damage. But: Pure financial losses such as loss of profit from an incorrect cost estimate are typically not the focus of the product liability regime. Here, contractual liability remains the main track.

When is the operator liable?

The operator (your client) is liable if:

- He uses the tool without checking it: Anyone who adopts AI-generated results unchecked is acting negligently.

- His employees are not trained: The AI Act (Article 4, applicable since February 2, 2025) requires providers and operators (called “deployers” in the English text of the regulation) to ensure a sufficient level of AI literacy among their employees. In practice, this means for the operator: training, guidelines and verifiable controls.

- He uses the tool outside of its intended purpose: A GPT for internal research is suddenly used for binding customer offers.

- He has not set up any human control: Critical decisions are made on the basis of AI output alone, without human review.

The crucial question: error, limit or usage problem?

This is where the core of the liability risk lies. With AI systems, there are not just two, but three categories:

1. Real error (developer responsibility): A bug, a wrong logic, faulty training data, a broken configuration. The GPT systematically miscalculates or crashes. This is a classic product defect.

2. System limit (gray area): LLMs have known limitations that are not an “error” in the technical sense: hallucinations, non-determinism (same input, different outputs), context gaps. These limits can be documented, but not completely avoided. Here, expectation management becomes crucial.

3. Usage error (operator responsibility): The user enters incomplete information, ignores warnings, uses the tool outside of its intended purpose, or adopts results without checking.

The problem with AI: The boundaries between these categories are often not clear. Was the wrong cost estimate a system error, a known limit of the model, or did the user prompt badly? This is difficult to prove in the event of a dispute – and that is exactly why documentation is so important.
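One way to make this kind of documentation concrete is to log every interaction in a form that can be produced later. The following is a minimal sketch, not a legally vetted solution: the class `EstimateLogEntry`, its field names and the helper functions are hypothetical, and which data you may store at all depends on your data protection setup.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class EstimateLogEntry:
    """One logged GPT interaction (all field names are illustrative assumptions)."""
    timestamp_utc: str
    user_id: str
    model_version: str
    user_input: str
    gpt_output: str
    reviewed_by: Optional[str] = None  # None means nobody checked the output


def make_entry(user_id: str, model_version: str, user_input: str,
               gpt_output: str, reviewed_by: Optional[str] = None) -> EstimateLogEntry:
    """Create a log entry stamped with the current UTC time."""
    return EstimateLogEntry(
        timestamp_utc=datetime.now(timezone.utc).isoformat(),
        user_id=user_id,
        model_version=model_version,
        user_input=user_input,
        gpt_output=gpt_output,
        reviewed_by=reviewed_by,
    )


def fingerprint(entry: EstimateLogEntry) -> str:
    """SHA-256 over a canonical JSON form, so later changes to the record show up."""
    canonical = json.dumps(asdict(entry), sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


entry = make_entry(
    user_id="employee-17",
    model_version="estimate-gpt-v3",
    user_input="Roof area 120 m2, clay tiles",
    gpt_output="Total: 740.00 EUR",
)
print(fingerprint(entry))
```

Storing the input, the output, the configuration version and the reviewer side by side helps answer the three-way question afterwards: the input shows whether the user supplied complete data, the version shows which configuration produced the result, and the reviewer field shows whether anyone checked it.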

Practical example:

A roofing company uses an AI-supported cost estimate generator. The employee who operates the tool has never been trained. The GPT delivers a faulty price, the customer accepts, the company makes a loss.

Lawyer’s question: “Was the employee trained in the use of the AI system?”

Answer: “No.”

This is the moment when a technical error becomes a liability case. The damage could have been avoided through appropriate training and testing, so the company has violated its duty of care.

An important judgment: In February 2024, the Kiel Regional Court ruled (Az. 6 O 151/23) that the operator remains responsible even when a process is automated with AI support. He cannot defend himself by arguing that he merely published third-party data without checking it. The core message: Automation does not protect against responsibility.

Contract for work and services or contract for services? An underestimated fork in the road

For AI projects, this question is not academic, but relevant to liability:

Contract for work and services logic: You owe a specific result. The customer has warranty rights in the event of defects, and the work is subject to formal acceptance. If the result does not work, you are liable.

Contract for services logic: You owe careful activity, not a guaranteed result. Liability is more limited.

Why this is important for AI: A GPT that is “supposed to create cost estimates” sounds like a contract for work and services. But can you guarantee an error-free result with an LLM? The answer massively influences your liability.

Recommendation: Formulate contracts in such a way that it is clear: You are delivering a tool with documented limits, not a guarantee of correct results. The duty to check lies with the operator.

Damage limitation in practice

Even if an AI system produces an error, this does not have to lead to the full damage. Smart operators build in safety nets:

Release process: The AI-generated offer is only binding after human release.

Output disclaimer: “Draft, subject to review by specialist personnel.”

Contestation and correction: Depending on the circumstances, an obviously erroneous price can be contested, which can limit the legal consequences.

For you as a developer: If the client does not accept human control and a binding review process in which AI outputs are downgraded to drafts, the project carries a structural liability risk. Then: raise the price, reduce the scope, or say no.
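The release process and the output disclaimer can also be enforced technically. The sketch below is one possible shape under stated assumptions: `LineItem`, `Estimate` and their methods are hypothetical names, the GPT is assumed to return line items plus a total, and amounts are assumed to be in euros. It only shows the technical gate. Whether an offer is legally binding is decided by the contract, not by the code.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import List, Optional

DISCLAIMER = "Draft, subject to review by specialist personnel."


@dataclass
class LineItem:
    description: str
    quantity: Decimal
    unit_price: Decimal


@dataclass
class Estimate:
    items: List[LineItem]
    stated_total: Decimal              # total as reported by the GPT
    released_by: Optional[str] = None  # set only after human release

    def recomputed_total(self) -> Decimal:
        """Recalculate the total deterministically from the line items."""
        return sum((i.quantity * i.unit_price for i in self.items), Decimal("0"))

    def release(self, reviewer: str) -> None:
        """Human release; refused if the GPT's total contradicts its own line items."""
        if self.recomputed_total() != self.stated_total:
            raise ValueError(
                f"Stated total {self.stated_total} does not match "
                f"recomputed total {self.recomputed_total()}"
            )
        self.released_by = reviewer

    def render(self) -> str:
        """Every output carries the disclaimer until a person has released it."""
        status = f"Released by {self.released_by}" if self.released_by else DISCLAIMER
        return f"Total: {self.stated_total} EUR\n{status}"


items = [
    LineItem("Roof tiles", Decimal("120"), Decimal("2.50")),
    LineItem("Labor hours", Decimal("8"), Decimal("55")),
]
estimate = Estimate(items, stated_total=Decimal("740"))
print(estimate.render())   # still marked as a draft
estimate.release("M. Meyer")
print(estimate.render())   # now shows who released it
```

The arithmetic check catches only one class of error, a total that does not follow from the line items. A wrong unit price or a missing item still needs the human reviewer, which is why the release step stays mandatory.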

Can you get insurance?

Yes, and you should do so urgently.

IT liability insurance (professional liability)

IT liability is the most important protection for AI developers. Depending on the policy and provider, it can cover:

- Pure financial losses: Monetary damage the customer suffers due to software errors

- Programming errors: Even non-reproducible errors

- Consulting errors: Incorrect recommendations for AI implementation

- IP risks: Copyright and license infringements (often with sublimits)

- Data protection: GDPR violations (often via separate cyber module)

Costs: Often from a low three-figure amount per year, depending on turnover, coverage amount and deductible. Providers such as ERGO, Hiscox, AXA or NÜRNBERGER offer special IT policies.

Important: Check exactly what your policy covers. ERGO, for example, offers financial loss liability insurance specifically for IT and data processing service providers, and Hiscox advertises an explicit “AI cover” for development and consulting on AI applications. But: Read exclusions and sublimits. Not every IT liability policy automatically covers all AI risks.

What the insurance does NOT cover

- Intentional breaches of duty: If you knowingly build in errors

- Claims for performance: The insurance does not pay your fee if the customer is dissatisfied

- Contractual penalties and fines: Only damages, no penalties

Coverage amounts

For AI projects, I recommend a minimum coverage amount of 500,000 euros for financial losses. For larger projects with high damage potential, correspondingly more.

How to protect yourself contractually

Insurance makes sense. However, it is better to avoid liability cases from the outset or at least clearly regulate who is responsible for what.

Regulate in the contract

1. Clarify roles

“The contractor acts as developer/provider within the meaning of the AI Act. As operator, the client assumes responsibility for training employees, proper use and verification of results.”

2. Limit intended purpose

“The AI system is intended exclusively for [specific purpose]. Use for other purposes is at the sole risk of the operator.”

3. Stipulate the operator’s duty to check

“The operator undertakes to have all outputs of the AI system checked by expert personnel before use in business-critical applications.”

4. Agree on limitation of liability

“The liability of the contractor is limited to [amount/order value]. This limitation does not apply in the event of intent or gross negligence.”

Attention: Blanket exclusions of liability such as “We are not liable for AI-generated content” are often legally ineffective in general terms and conditions (GTC).

Document upon handover

- Create operating instructions: What can the tool do, what can’t it do?

- Document limitations: Known limits and sources of error

- Point out training obligation: Draw attention in writing to the fact that the operator must train his employees

- Have handover protocol signed: Customer confirms that he knows the limitations

Possible distribution of liability:

| Scenario | Who is liable? | Basis of claim |
|---|---|---|
| Systematic calculation error in the GPT | Developer | Contractual liability (poor performance) |
| User has used incorrect input data | Operator | Organizational fault |
| GPT has calculated correctly, but user has not checked result | Operator | Violation of the duty to check |
| Known system limit (hallucination), but not documented | Developer | Lack of documentation |
| No training, no documentation, no testing | Both proportionally | Shared responsibility |

In practice: If both sides have made mistakes, a settlement is often sought. The insurance companies negotiate, and in the end each side pays a part.

My recommendation: This is how you minimize your risk

As a developer

- Take out IT liability insurance – At least 500,000 euros coverage for financial losses

- Document cleanly – Every system needs operating instructions with clear limits

- Secure contractually – Regulate roles, intended purpose, duties to check in writing

- Demand proof of training – Have it confirmed before handover that the operator knows his duties

- Do not make assurances that you cannot keep – “The GPT makes no mistakes” is a dangerous statement

What you should NOT do

- Accept projects without insurance – The risk is too high

- Assure everything across the board – Be honest about the limits of AI

- Hand over undocumented – In the event of a dispute, you lack the evidence

- Rely on “the customer will check anyway” – He won’t do it

Special case: You develop, the customer passes on to third parties

It becomes particularly tricky if your client passes the AI tool on to his own customers or partners.

The regulatory background: Article 25 AI Act regulates responsibilities along the AI value chain – but primarily for high-risk AI systems. Many Custom GPTs for cost estimates do not automatically fall into this category according to today’s understanding.

Nevertheless relevant: If the system is classified as high-risk, or the customer pushes it in this direction by changing its intended purpose, he can become a provider from a regulatory perspective. This happens, for example, with:

- Placing on the market under its own name or brand

- Significant changes to the system

- Change of intended purpose (e.g. from “internal” to “customer-effective”)

For you as a developer, this means:

- Clarify in the contract whether and how the tool may be passed on

- If so, the client must assume the corresponding obligations

- Document that you have pointed out this constellation

- If you are unsure about the risk class: recommend legal review

The risk is real, but manageable

AI development for customers is not a risk-free business. But with the right protection, the risk is calculable:

- IT liability insurance – Your first line of defense

- Clear contracts – Regulate roles and responsibilities in writing

- Clean documentation – Make limits and restrictions transparent

- Training instructions – Draw the operator’s attention to his duties

The goal is not to exclude every liability. That doesn’t work. The goal is to be able to prove in the event of damage that you have fulfilled your duties of care.

Frequently asked questions about AI liability

As a developer, can I be completely exempt from liability?

No. A complete exemption from liability is not possible in the event of intent and gross negligence. However, you can limit liability to specific amounts and clearly define what you are responsible for and what the operator is responsible for.

Is normal business liability insurance sufficient?

Mostly not. You need IT liability insurance that covers financial losses. Normal business liability insurance often only covers personal injury and property damage.

How much does IT liability insurance cost?

Often from a low three-figure amount per year, depending on turnover, coverage amount and deductible. Compare offers and check exactly what is covered.

Is OpenAI not liable as the provider of the base model?

Partially. OpenAI is liable for errors in the base model (GPT-4 etc.). However, if you build a Custom GPT, you are responsible for your configuration, your prompts and your documentation.

Do I have to inform my customers about the training obligation?

Not legally required. But it protects you in the event of a dispute if you can prove that the customer was aware of their obligations.

What if the customer has no insurance and cannot pay?

Then the damage may remain with you if you are jointly responsible. All the more reason to only work with reputable clients and, when in doubt, demand advance payment.

Are you planning an AI project and want to minimize your liability risk?

In a free initial consultation, we will jointly analyze your setup and develop a hedging strategy.
