AI platform for companies:

Why your employees shouldn't be writing prompts

2026 will be the year that reveals which companies are truly using AI productively and which are just talking about it. The technology is already available: ChatGPT, Claude, Gemini, Mistral, and numerous other models are ready for use. The challenge lies elsewhere. Most employees use the AI tools provided only sporadically or not at all. The reason is surprisingly simple: Writing prompts is a skill that hardly anyone has. auraHub solves this problem with a different approach.

The prompt dilemma: Why AI tools remain unused

ChatGPT, Claude, Gemini, and Mistral are impressive. In theory. But when employees are faced with a blank input field, one of two things often happens: Either they type in something vague and get useless results, or they don’t use the tool at all because the effort involved seems too great.

The problem is not the technology. It is the expectation that ordinary employees will suddenly become prompt experts. An accountant is expected to know how to get a language model to produce a precise summary. A salesperson is expected to spontaneously formulate the right instruction to generate a convincing email.

That’s like expecting every employee to master SQL just because the company has a database.

The reality in many companies: There are licenses, there are training courses, there may even be an AI strategy. But in day-to-day work, most employees continue to open Word instead of ChatGPT. Not out of unwillingness, but out of uncertainty.

What are Micro Apps? Definition and Functionality

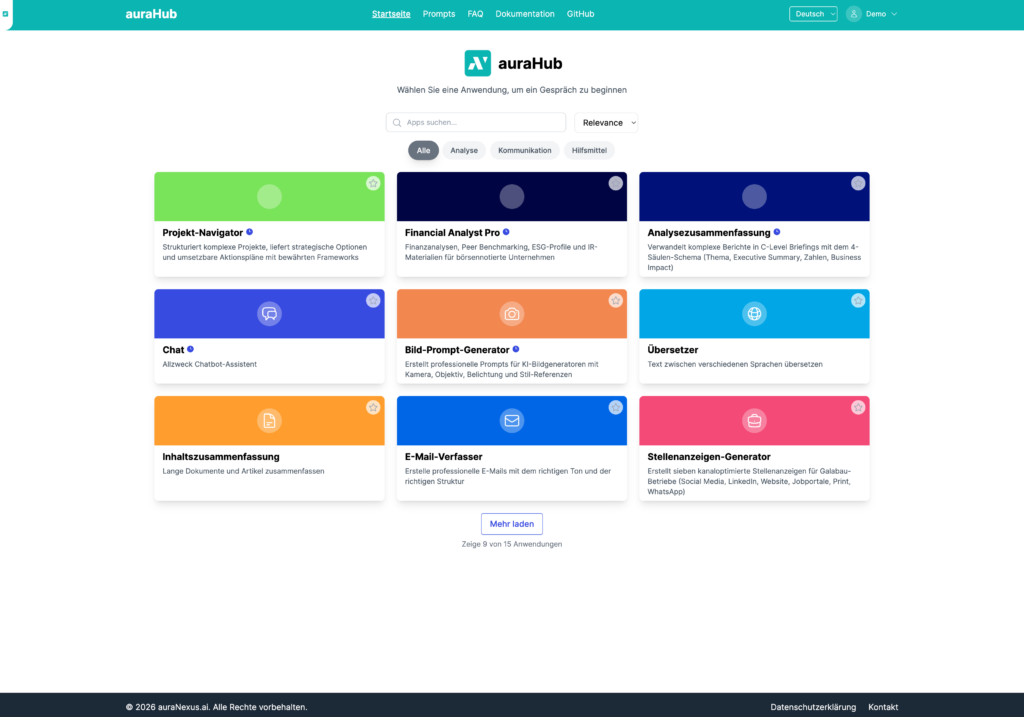

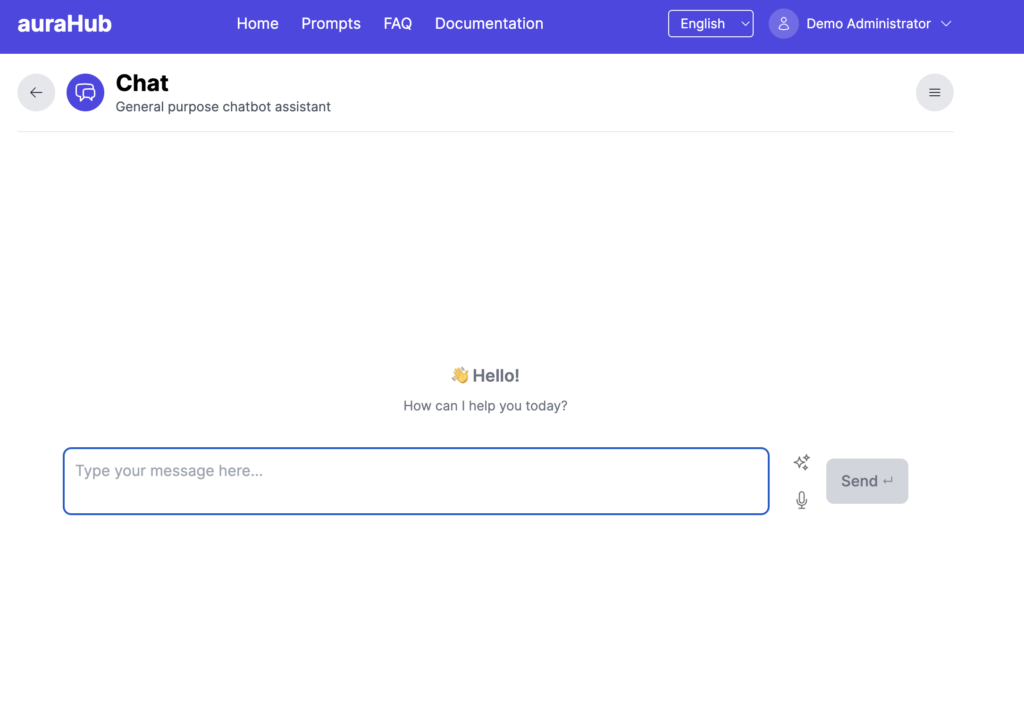

Definition: Micro Apps are specialized mini-applications that solve exactly one task. Each Micro App has a clearly defined input form. Users fill out fields, click a button and receive a result. The language model works in the background, but the user does not have to write any prompts.

The difference from the classic chatbot interface: Instead of a blank text field, the user sees structured input fields. Instead of facing an open question, they answer targeted questions. The quality of the results no longer depends on the user’s skills, but on the quality of the stored prompts. And these are created once by experts, not improvised daily by laypeople.
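The idea of a form plus a stored prompt can be sketched in a few lines of Python. This is only an illustration under assumptions: the `MicroApp` class, its field names, and the template text are hypothetical and do not describe how auraHub is actually implemented.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class MicroApp:
    """One task, one form, one stored prompt template (hypothetical structure)."""
    name: str
    fields: tuple            # names of the form fields shown to the user
    prompt_template: str     # written once by a prompt expert

    def build_prompt(self, form_values: dict) -> str:
        # Reject incomplete forms instead of sending a vague prompt to the model.
        missing = [f for f in self.fields if not form_values.get(f)]
        if missing:
            raise ValueError(f"Missing form fields: {', '.join(missing)}")
        return Template(self.prompt_template).substitute(form_values)


# Illustrative app definition; the template wording is an assumption.
social_media_app = MicroApp(
    name="Social Media Assistant",
    fields=("topic", "tonality", "platform"),
    prompt_template=(
        "Write a $platform post about '$topic'. "
        "Tone: $tonality. Follow the corporate language guidelines."
    ),
)

prompt = social_media_app.build_prompt(
    {"topic": "our new office", "tonality": "informative", "platform": "LinkedIn"}
)
print(prompt)
```

The user only ever supplies the three form values; the wording that steers the language model lives in the template and can be improved centrally without retraining anyone.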

Examples of Micro Apps in corporate use

- Social Media Assistant: Enter topic, select tonality (informative, emotional, provocative), select platform (LinkedIn, Instagram, X). The app generates a finished post that fits the corporate language. Time savings per post: approximately 15 minutes.

- Press Release Generator: Enter core message, management quotes, and facts. The app structures everything into the classic press release format with headline, lead, body text, and boilerplate.

- Document Compressor: Upload PDF, specify desired length (short version, management summary, detailed analysis). The app delivers a structured summary with the most important points and recommendations for action.

- Email Optimizer: Insert draft, define goal (inform, convince, de-escalate, arrange appointment). The app revises tonality, structure, and call-to-action accordingly.

- Meeting Minutes Assistant: Upload audio file or notes. The app creates a structured log with participants, topics discussed, resolutions, and open points.

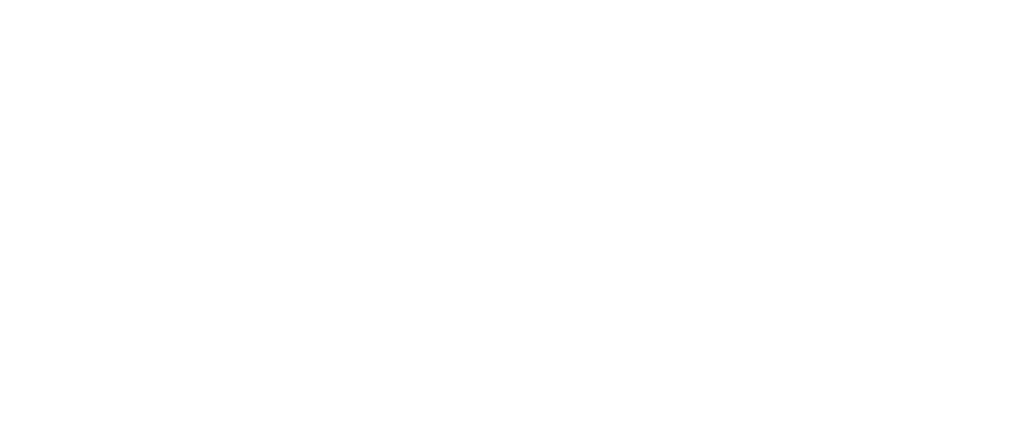

auraHub: The AI workplace platform for companies

We have implemented this idea in auraHub. auraHub is an AI platform for generative AI that we use at auraNexus.ai as part of our AI consulting and configure individually for customers.

The platform already includes numerous pre-built Micro Apps: Email Generator, Translator, Meeting Assistant, HR Assistant, Memo Generator, and more. Each app can be adapted to the requirements of the respective company. New apps can be created without programming knowledge by defining input fields and storing prompts.

Technical specifications of auraHub

- Model-independent: Supports all common language models, including OpenAI, Claude, Gemini, and Mistral. New models are continuously integrated.

- Model combination: A different model can be stored for each Micro App. Mistral for fast translations, Claude for complex analyses, OpenAI for creative texts.

- Deployment options: On-premises in your own infrastructure or controlled cloud environment

- Included Apps: Email Generator, Translator, Meeting Assistant, HR Assistant, Memo Generator, Web Chat, and more

- Expandable: New Micro Apps can be created without programming knowledge

- White-label capable: Fully adaptable to corporate design
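The model combination described in the list above amounts to a lookup from Micro App to model. A minimal sketch, assuming a plain dictionary; the keys, labels, and fallback are hypothetical and not actual auraHub configuration:

```python
# Hypothetical per-app model assignment; the labels mirror the examples above
# and are not actual auraHub configuration keys.
MODEL_BY_APP = {
    "Translator": "mistral",
    "Document Compressor": "claude",
    "Social Media Assistant": "openai",
}
DEFAULT_MODEL = "openai"  # assumed fallback for apps without an explicit entry


def model_for(app_name: str) -> str:
    """Return the model label configured for a Micro App."""
    return MODEL_BY_APP.get(app_name, DEFAULT_MODEL)


print(model_for("Translator"))         # mistral
print(model_for("Meeting Assistant"))  # openai (fallback)
```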

The knowledge layer: Why enterprise search is not Google

Micro Apps for creative tasks are a start. But the real strength unfolds when the AI accesses company-owned data. And this is where it gets technically challenging.

Imagine an employee asks: “What did we communicate about sustainability last year?” Without access to internal data, no language model can answer that, no matter how good it is. The answer lies in SharePoint documents, in emails, in Confluence pages, on the file server. Distributed across dozens of systems.

The problem with simple search

The obvious solution would be: Give the language model access to all documents. But this is exactly where most approaches fail. A simple full-text search finds documents that contain certain words. But it doesn’t understand what it’s about.

An example: You are looking for “vacation policy”. The simple search finds all documents in which this word occurs. But what about documents that talk about “vacation planning”, “absence management” or “time off”? A simple search will not find them, even though they are thematically relevant.
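The limitation is easy to reproduce. The following sketch uses three made-up documents (titles and contents are invented for illustration) and a naive substring search:

```python
# Made-up sample documents, for illustration only.
documents = {
    "hr-001": "Vacation policy: rules for annual leave.",
    "hr-002": "Absence management: how to request time off.",
    "hr-003": "Vacation planning for the summer months.",
}


def full_text_search(query: str, docs: dict) -> list:
    """Return the ids of documents that contain the query string literally."""
    needle = query.lower()
    return [doc_id for doc_id, text in docs.items() if needle in text.lower()]


hits = full_text_search("vacation policy", documents)
print(hits)  # ['hr-001']
```

Only the document with the literal phrase is returned. The two thematically related documents are missed.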

Even more problematic: Who is allowed to see what? In a company, not everyone has access to all documents. Personnel files, contract drafts, and strategic planning are subject to clear access rights. A simple search ignores this. A professional enterprise search does not.

What makes a professional enterprise search

A real Enterprise Search is much more than a full-text search. It combines several technologies to reliably find relevant information:

Semantic search and vector search: Modern systems understand the meaning of requests, not just individual words. If someone asks “How do I apply for vacation?”, the system also finds documents about “absence request” or “time off” because it recognizes the semantic context. The vector search converts texts into mathematical representations and thus finds passages with similar content, even if they use completely different words.
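A toy example shows the principle of ranking by vector similarity. The three-dimensional vectors below are invented by hand for illustration; a real system would obtain high-dimensional vectors from an embedding model, which this sketch does not include.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made toy vectors; assumed axes: (time off, invoicing, IT).
doc_vectors = {
    "Absence request form": [0.9, 0.1, 0.0],
    "Time off guidelines": [0.8, 0.0, 0.1],
    "Invoice approval process": [0.0, 0.9, 0.1],
}
query_vector = [1.0, 0.0, 0.0]  # stands in for "How do I apply for vacation?"


def vector_search(query_vec, vectors, top_k=2):
    """Return the top_k document titles ranked by cosine similarity."""
    ranked = sorted(
        vectors,
        key=lambda title: cosine_similarity(query_vec, vectors[title]),
        reverse=True,
    )
    return ranked[:top_k]


print(vector_search(query_vector, doc_vectors))
# ['Absence request form', 'Time off guidelines']
```

Neither result contains the word "vacation"; they rank highest because their vectors point in nearly the same direction as the query vector.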

Linguistic analysis: The system recognizes word stems, synonyms, and linguistic variants. “Application”, “apply”, and “applying” are understood as belonging together. This is particularly important for German-language content with its compound words and inflections.

Connectors to all data sources: Company knowledge is stored in SharePoint, Confluence, file servers, email systems, databases, wikis, and numerous other systems. A professional solution comes with ready-made connectors, more than 80 of them, to connect all these sources and keep them synchronized.

Real-time rights verification: Each user only sees what they are authorized to see. The search adopts the access rights from the source systems and checks them with every request. A sales representative sees different results than an HR manager, even though both ask the same question.
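In its simplest form, rights verification means filtering search hits against the user's groups on every request. The sketch below assumes group-based permissions; the `Document` class, the group names, and the sample documents are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    allowed_groups: frozenset  # groups taken over from the source system (assumed)


def visible_results(hits, user_groups):
    """Keep only the hits the user may see; evaluated for every request."""
    return [doc for doc in hits if doc.allowed_groups & user_groups]


# Made-up search hits for the same question asked by two different users.
hits = [
    Document("Employee handbook", frozenset({"all-staff"})),
    Document("Salary bands 2026", frozenset({"hr"})),
]

sales_view = visible_results(hits, {"all-staff", "sales"})
hr_view = visible_results(hits, {"all-staff", "hr"})

print([d.title for d in sales_view])  # ['Employee handbook']
print([d.title for d in hr_view])     # ['Employee handbook', 'Salary bands 2026']
```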

Document processing: PDFs, Word documents, PowerPoints, Excel spreadsheets, and scanned documents. The system must understand all these formats and make them searchable. This also includes extracting text from images (OCR) and analyzing table structures.

RAG: How search and AI work together

What is RAG? RAG stands for Retrieval Augmented Generation. This method first searches for relevant documents or passages from the company’s inventory (Retrieval). The language model then generates an answer based on these documents (Generation). The model is instructed to answer from this existing content rather than from its general training knowledge.

The crucial point: The quality of the AI response depends directly on the quality of the search. If the search delivers the wrong documents, even the best language model cannot give a good answer. That’s why a professional enterprise search is so important as a basis.
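The two steps can be sketched as follows. This is a simplified illustration, not the auraHub implementation: retrieval is a plain word-overlap score standing in for a real enterprise search, the passages are made up, and `generate` is a placeholder for whichever language model is called.

```python
import re
from typing import Callable


def words(text: str) -> set:
    """Lowercased word set, punctuation stripped."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, passages: dict, top_k: int = 2) -> list:
    """Stand-in retrieval: rank passages by number of shared words."""
    q = words(question)
    scored = [(len(q & words(text)), source, text) for source, text in passages.items()]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: s[0], reverse=True)  # stable: ties keep input order
    return [(source, text) for _, source, text in scored[:top_k]]


def build_prompt(question: str, retrieved: list) -> str:
    """Put only the retrieved passages in front of the model."""
    context = "\n".join(
        f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(retrieved, start=1)
    )
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


def answer(question: str, passages: dict, generate: Callable[[str], str]) -> dict:
    retrieved = retrieve(question, passages)
    prompt = build_prompt(question, retrieved)
    # Return the sources alongside the answer so users can verify it.
    return {"answer": generate(prompt), "sources": [s for s, _ in retrieved]}


# Made-up passages and a fake model, for illustration only.
passages = {
    "sustainability-report.pdf": "Our sustainability report covers emissions and energy use.",
    "press-2024-03.docx": "Press release on the new sustainability goals.",
    "canteen-menu.pdf": "Lunch menu for the week.",
}
result = answer(
    "What did we communicate about sustainability?",
    passages,
    generate=lambda prompt: "(model output would appear here)",
)
print(result["sources"])  # ['sustainability-report.pdf', 'press-2024-03.docx']
```

If `retrieve` returns the wrong passages, the prompt contains the wrong context, and the model has nothing better to work with. That is the dependency between search quality and answer quality in concrete terms.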

Why this delivers better results

- Fewer hallucinations: The model responds based on existing documents. It is far less likely to invent content because the passages found by the search are its only source.

- Always up-to-date: Information comes directly from the live data stock. Unlike training data, which was frozen at a certain point in time, the search reflects the current status.

- Rights-checked: Each user only receives answers based on documents for which they are authorized. Confidential information remains protected.

- Traceable: The sources are provided for each answer. Users can check where the information comes from and open the original document if necessary.

- Company-specific: The answers take into account internal terms, abbreviations, product names, and processes. The model answers in the context of the company, not with generic knowledge.

Integration in auraHub

In auraHub, this knowledge layer can be connected as an additional component. The Micro Apps can then access not only general knowledge, but also company data.

An example: The Micro App “Press Release Generator” not only asks for core message and quotes, but can automatically check how similar topics have been communicated in the past. Or the app “Email Optimizer” knows the internal language regulations and templates.

However, the connection requires additional effort: connectors to data sources, rights concepts, indexing, and quality assurance. This is a separate project, not a side task. But it is the step that makes AI truly productive in the company.

GDPR and AI Act: Meeting compliance requirements

Data protection is not a marginal issue. Many companies hesitate to use AI because they do not know what happens to their data. This is a crucial criterion, especially in regulated industries such as insurance, banking, or public administration.

How auraHub addresses compliance requirements

- On-Premises operation: The platform can run completely in its own IT infrastructure. No data leaves the company network.

- Controlled cloud: Alternatively, cloud operation is possible, in which it is clearly defined which data flows where.

- Model selection: Companies decide for themselves which language models they use. Whether OpenAI, Claude, Gemini, Mistral, or local models, the decision lies with the company.

- AI Act conformity: The solution meets the requirements of the European AI Act for AI applications with low risk.

Outlook 2026: What will change

2026 begins with a clear realization: The experimentation phase is over. Companies that have only tested AI so far are faced with the decision of whether and how to go into productive use.

Three developments that will shape this year

- From individual solutions to the platform: Many companies experimented with various AI tools in 2024 and 2025. Now comes consolidation. Instead of five different applications for five tasks, a platform is needed that bundles everything. auraHub is designed exactly for this.

- The AI Act becomes reality: Further parts of the European AI Regulation take effect. Companies must document which AI systems they use and how. Solutions that are designed for compliance from the start have a clear advantage.

- Multimodal AI in everyday business: The next generation of language models processes text, image, audio, and video simultaneously. This opens up new use cases: image briefings, video summaries, voice input for Micro Apps. auraHub is prepared for this and supports the integration of new models without platform changes.

For us at auraNexus.ai, this means: We are continuously expanding auraHub. New Micro Apps, improved connectors for the knowledge layer, and support for the latest models are on the roadmap. At the same time, we support companies in making the step from AI experiments to a real productivity tool.

How to get started with auraHub: The Proof of Concept

At auraNexus.ai, we offer the auraHub as part of our AI consulting. The entry typically takes place via a Proof of Concept (PoC) with a clearly defined scope.

What a PoC includes

- Time frame: 4 weeks

- Technical setup: Dedicated test environment with access for test users

- Micro Apps: 3–5 Use Cases such as Social Media, press releases, document summarization

- Model connection: Integration of Claude, OpenAI, Mistral, or other models incl. API costs

- Support: Professional support, feedback collection, joint evaluation

The goal of the PoC is a reliable assessment: Does the architecture work? How good are the results? Which Micro Apps have the greatest added value for your company?

Important: The connection of a knowledge layer with professional enterprise search is not part of the first PoC. This requires additional person-days for data source connection, rights concepts, and quality assurance. However, it can be planned as the next step as soon as the Micro Apps work.

Frequently asked questions about auraHub

What does auraHub cost?

The costs are composed of: Setup and configuration by auraNexus.ai, ongoing support (optional), as well as use of the language models (API costs for cloud models or infrastructure costs for on-premises operation). A Proof of Concept provides clarity about the concrete effort.

Which language models are supported?

auraHub supports all common language models: OpenAI, Anthropic, Google, Mistral, as well as local models such as Llama. Several models can be used in parallel, and each Micro App can use a different model.

Is auraHub GDPR-compliant?

Yes, the auraHub can be operated completely on-premises, so that no data leaves the company network. When using the cloud, the company decides which models and data centers are used. The solution is compatible with GDPR and the European AI Act.

Do employees need prompt knowledge?

No. That is the core idea of auraHub. Employees use preconfigured input forms instead of free text fields. The prompts are created once by experts and stored in the system. Users fill out fields and receive results.

How quickly is auraHub ready for use?

A Proof of Concept with initial Micro Apps can be implemented in 4 weeks. For a productive rollout with a knowledge layer (enterprise search), 2–3 months should be planned, depending on the number and complexity of the data sources to be connected.

What is the difference between auraHub and ChatGPT Enterprise?

ChatGPT Enterprise offers a chat interface with security and admin functions. auraHub goes one step further: Instead of an empty text field, it offers specialized Micro Apps with input forms. In addition, auraHub is model-independent and can also be operated with Claude, Gemini, Mistral, or local models. And the optional knowledge layer enables access to company data with a professional search architecture.

Ready for the next step

If you want to know whether the auraHub works for your company, please contact us. A proof of concept provides clarity in four weeks.

