A pragmatic approach to AI governance:

As much structure as necessary, as little as possible

Too few rules create risk. Too many rules kill usage.

The Dilemma of AI Guidelines

36% of German companies are already using AI, almost twice as many as in the previous year. However, according to Bitkom, 53% see legal hurdles as the biggest obstacle, and 76% complain about uncertainty due to legal ambiguities. This gap between use and control is the biggest unsolved problem of the digital transformation in Germany.

Other figures make the gap even clearer: According to KPMG, 69% of German companies have an AI strategy, but only 26% have established company-wide trusted AI governance. And according to a CyberArk study, 66% of German companies do not have complete control over unauthorized AI tools.

Most companies are stuck in a dilemma. Either they have no rules and risk data leaks, compliance violations and reputational damage. Or they have rules that are so complicated that nobody follows them.

Why classic governance models fail with AI

Classic IT Governance follows a proven pattern: A central team defines rules, approves technologies and monitors usage. With AI, this model fails in three areas.

The pace does not match. While IT Governance takes months to approve a tool, employees have long since started experimenting with new AI tools. According to the Zendesk CX Trends Report, 49% of service employees are already using external generative AI tools, compared to only 17% in the previous year. Usage has almost tripled. And according to Bitkom, 10% of employees deliberately use AI without their employer's knowledge, twice as many as a year earlier.

Controls do not take hold. According to the KPMG/University of Melbourne study, 57% of employees worldwide secretly use AI tools. In German public authorities, the problem is particularly acute: A Microsoft/Civey study shows that 45% of employees at the federal level use unapproved AI tools. These are not malicious actors, but people who want to get their work done.

Responsibility is unclear. AI systems do not make decisions; they provide recommendations. But who is responsible if the recommendation is wrong? The employee who adopted it? The IT department that approved the tool? The business department that defined the use case? According to Bitkom, only one-fifth of German employees have received AI training so far, and seven out of ten have never been offered any.

The consequence is measurable: According to CyberArk, 41% of German companies actively use AI tools that have not been officially approved. At the same time, 94% are already using AI and large language models. The gap between usage and governance is growing.

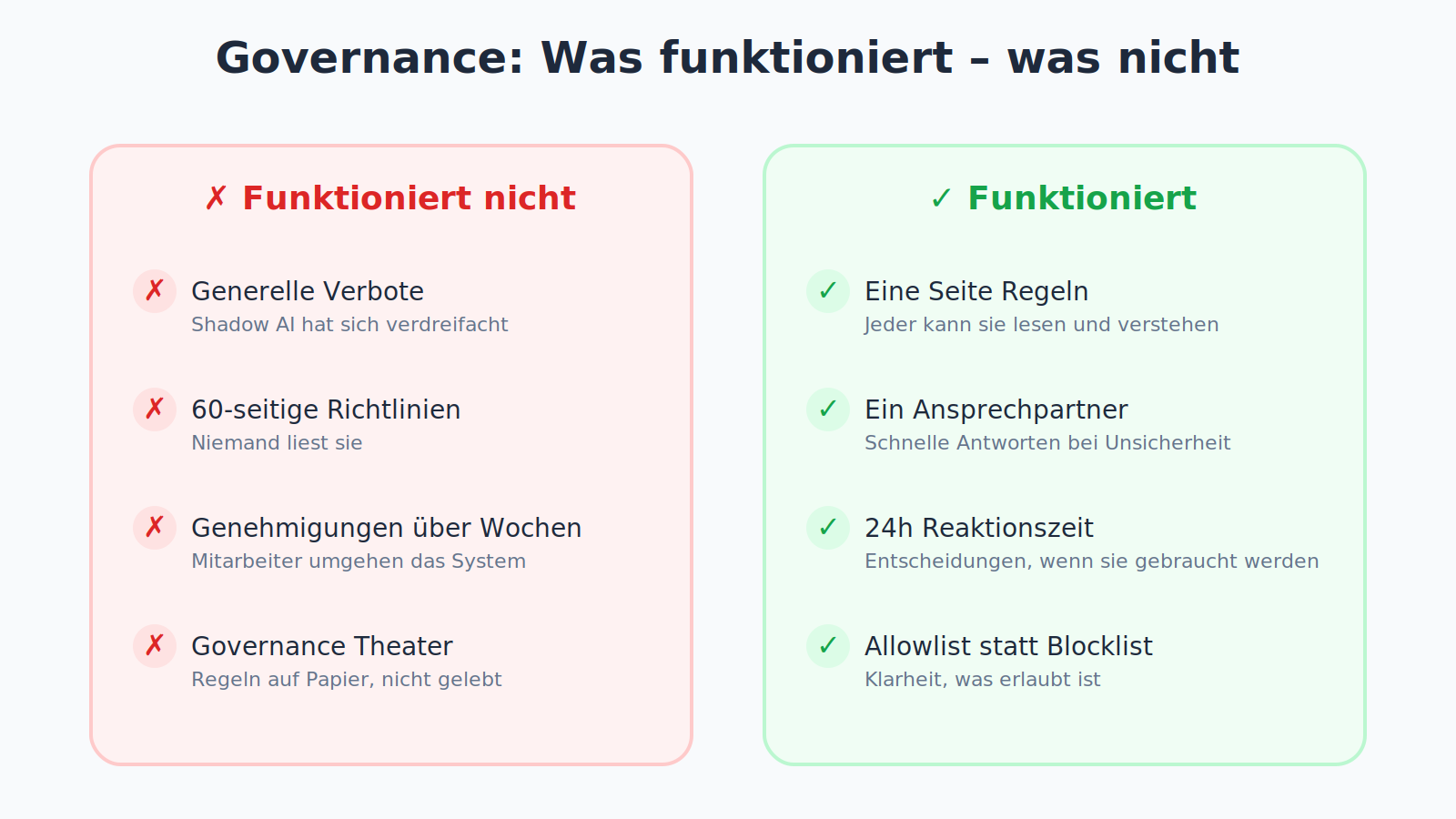

The most common overcorrections in AI guidelines

The reaction of many companies to these risks is more regulation. But that’s exactly what exacerbates the problem.

Overcorrection 1: Blanket bans. After initial data protection incidents, many German corporations reacted with blanket AI bans. The result? Employees resort to private accounts. The Zendesk CX Trends Report shows that the use of external generative AI tools among service employees has almost tripled, from 17% to 49%. Matthias Göhler, CTO EMEA at Zendesk, warns: “It endangers data security when confidential customer information gets into unauthorized systems.”

Overcorrection 2: Legal perfection. Some AI guidelines read like legal texts: comprehensive, watertight, incomprehensible. According to Zendesk, 65% of CX executives see a need for more AI transparency, and 83% name data protection and secure AI implementation as top priorities. But the complexity of the requirements overwhelms many companies.

Overcorrection 3: Governance theater. According to Bitkom, only 23% of German companies have established rules for AI use (previous year: 15%). But paper does not change behavior. At the same time, shadow AI is spreading: In 8% of companies it is already widespread (previous year: 4%), and in another 17% there are individual cases.

Overcorrection 4: Too many approval levels. If every AI use case has to go through five departments, one of two things happens. Either the approval takes so long that the project becomes irrelevant. Or the employees bypass the system completely. German businesses are therefore demanding clear consequences: According to Bitkom, 91% of industrial companies say that policymakers should not stifle AI innovation through over-regulation. And 46% of companies are calling for a reform of the EU AI Act.

The 5 Governance Building Blocks with Real Benefits

Effective AI Governance is not a set of rules, but a manageable framework. The concept of “Minimum Viable Governance” describes what actually works: essential controls that enable rapid innovation without ignoring risks.

Five building blocks form the foundation.

A Cloud Security Alliance study from December 2025 confirms this: Companies with mature governance are far more likely to be early adopters of agentic AI (46%) than those without clear guidelines (12%). Governance does not slow adoption down; it accelerates it.

1. Permitted Use Cases

Instead of forbidding everything and then approving exceptions, the opposite works better: A clear list of permitted use cases with defined limits.

What it includes:

- Which AI tools are approved for which tasks

- Which data types may be processed (public, internal, confidential, personal)

- Which use cases are explicitly excluded (automated decisions about people, customer communication that is not labeled as AI-generated)

Why this works: Employees know what they are allowed to do without asking beforehand. This reduces both uncertainty and shadow AI.
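As a rough illustration, a list of permitted use cases can be kept as plain data and checked in a few lines. The sketch below is only one possible shape: the tool names, task labels and the ordering of data types from least to most sensitive are assumptions made for this example, not part of any standard.

```python
from dataclasses import dataclass

# Data types from the list above. Treating them as ordered from least to
# most sensitive is an assumption of this sketch.
DATA_CLASSES = ["public", "internal", "confidential", "personal"]


@dataclass(frozen=True)
class UseCase:
    tool: str            # hypothetical tool name
    task: str            # hypothetical task label
    max_data_class: str  # most sensitive data type allowed for this pairing


# Illustrative allowlist; every entry is a placeholder, not a recommendation.
PERMITTED = [
    UseCase("text-assistant", "draft_internal_email", "internal"),
    UseCase("text-assistant", "summarize_public_report", "public"),
    UseCase("image-generator", "presentation_graphics", "public"),
]

# Tasks that stay excluded regardless of tool or data type.
EXCLUDED_TASKS = {
    "automated_decision_about_person",
    "unlabeled_customer_communication",
}


def is_permitted(tool: str, task: str, data_class: str) -> bool:
    """Return True only if the combination is explicitly on the allowlist."""
    if task in EXCLUDED_TASKS or data_class not in DATA_CLASSES:
        return False
    level = DATA_CLASSES.index(data_class)
    return any(
        uc.tool == tool
        and uc.task == task
        and level <= DATA_CLASSES.index(uc.max_data_class)
        for uc in PERMITTED
    )
```

Anything that is not on the list is rejected by default, so a new use case has to be added deliberately rather than slipping through.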

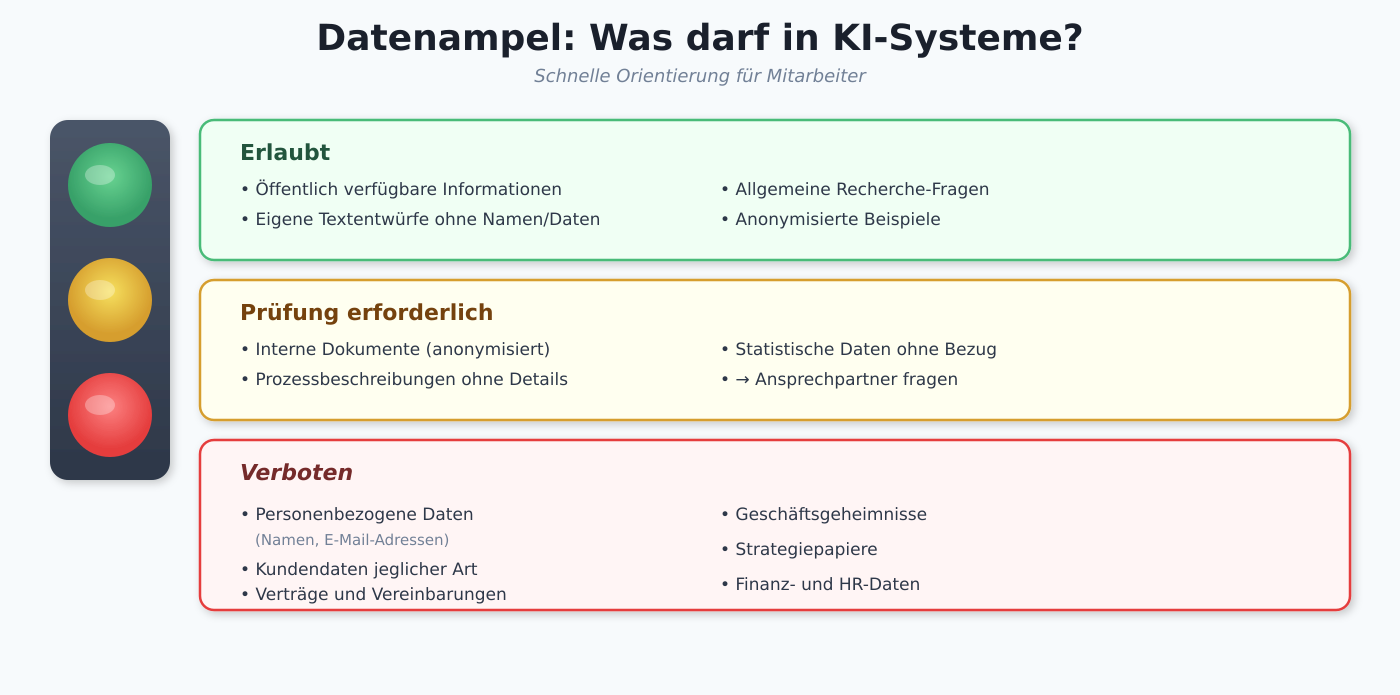

2. Data Rules

Most AI risks arise from data: wrong data in, confidential data out, personal data in foreign systems. In Germany, the GDPR is an additional requirement.

What it includes:

- Classification: Which data may be in external AI systems (no personal data, no trade secrets, no customer data)

- Anonymization: How must data be prepared before it flows into AI systems

- Storage: Where are AI-generated results stored, who has access

Why this works: Clear data rules prevent 80% of typical AI risks without restricting usage. German companies have an advantage here: The GDPR experience can be directly transferred to AI Governance.
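A minimal sketch of how such data rules might be checked before text leaves the company. The category labels and function names are assumptions for illustration, and the e-mail pattern is deliberately simple: masking addresses is only one small preparation step and does not amount to full anonymization.

```python
import re

# Categories that must not reach external AI systems, following the
# classification rule above. The label strings are hypothetical.
BLOCKED_CATEGORIES = {"personal", "trade_secret", "customer"}

# Deliberately simple pattern; it will miss unusual address formats.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def may_use_external_ai(categories: set[str]) -> bool:
    """Allow a document only if none of its categories is blocked."""
    return not (categories & BLOCKED_CATEGORIES)


def redact_emails(text: str) -> str:
    """Replace e-mail addresses with a placeholder before further use."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)
```

For example, `redact_emails("Contact anna.schmidt@example.com today.")` yields `"Contact [EMAIL] today."`, while a document tagged `{"public", "customer"}` is rejected by `may_use_external_ai`.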

3. Quality Checks

AI systems hallucinate. They invent facts, sources and statistics. Every output needs a plausibility check, but not every output needs the same check.

What it includes:

- Risk levels: Internal brainstorming note vs. customer presentation vs. contract draft

- Review depth: Plausibility check vs. source check vs. specialist approval

- Responsibility: Who checks, who approves, who is liable

Why this works: Graduated review concentrates effort where errors become expensive.
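The graduated approach can be sketched as a small lookup from output type to the minimum check it needs. The pairing used here (internal note with plausibility check, customer presentation with source check, contract draft with specialist approval) follows the order of the lists above but is an assumption of this example, as are all names.

```python
from enum import IntEnum


class Review(IntEnum):
    """Review depths, ordered from lightest to strictest."""
    PLAUSIBILITY = 1
    SOURCE_CHECK = 2
    SPECIALIST_APPROVAL = 3


# Hypothetical mapping of output types to the minimum review they need.
REQUIRED_REVIEW = {
    "internal_note": Review.PLAUSIBILITY,
    "customer_presentation": Review.SOURCE_CHECK,
    "contract_draft": Review.SPECIALIST_APPROVAL,
}


def required_review(output_type: str) -> Review:
    # Unknown output types fall back to the strictest review.
    return REQUIRED_REVIEW.get(output_type, Review.SPECIALIST_APPROVAL)


def may_release(output_type: str, performed: Review) -> bool:
    """An output may go out once the performed review is deep enough."""
    return performed >= required_review(output_type)
```

Defaulting unknown output types to the strictest level keeps the table short without creating a loophole for anything that has not been classified yet.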

4. Escalation Paths

Not every situation is covered by rules. There needs to be a clear path for borderline cases and new applications.

What it includes:

- Contact person: Who decides on new use cases, who answers questions in case of uncertainty

- Response time: How quickly must a decision be made (24 hours, not 4 weeks)

- Documentation: How are decisions recorded so that they serve as precedents

Why this works: Rapid escalation prevents employees from either doing nothing or acting on their own when uncertain.
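A simple decision log is enough to track both the response time and the precedents. The following sketch assumes a 24-hour target as mentioned above; the record fields and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Response target taken from the list above.
RESPONSE_TARGET = timedelta(hours=24)


@dataclass
class EscalationRequest:
    question: str
    asked_at: datetime
    decided_at: Optional[datetime] = None
    decision: Optional[str] = None


def is_overdue(req: EscalationRequest, now: datetime) -> bool:
    """True if the request took (or is taking) longer than the target."""
    end = req.decided_at or now
    return end - req.asked_at > RESPONSE_TARGET


def find_precedents(log: list[EscalationRequest], keyword: str) -> list[EscalationRequest]:
    """Return decided requests whose question mentions the keyword."""
    needle = keyword.lower()
    return [
        r for r in log
        if r.decision is not None and needle in r.question.lower()
    ]
```

Searching earlier decisions before asking again is what turns individual answers into precedents.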

5. Review Cycles

AI is developing rapidly. What applies today may be obsolete tomorrow. Governance must evolve with it.

What it includes:

- Rhythm: Quarterly review of the guidelines, not annually

- Feedback: How do employees report problems and suggestions for improvement

- Adjustment: Who can change rules, how quickly

Why this works: Regular updates keep governance relevant and show that feedback is taken seriously.

Practical example: Governance without bureaucracy

A medium-sized German company with 200 employees implemented AI Governance in four weeks. Not perfect, but effective.

Week 1: Inventory. Which AI tools are already being used? Which data flows where? The result was sobering: 12 different AI tools in use, 9 of them without IT's knowledge.

Week 2: Consolidation. Three tools were defined as standard: one for text, one for images, one for data analysis. All with company licenses, all GDPR compliant, all with single sign-on.

Week 3: Rules of the Game. One page with the most important rules: What may go in (public information, own text drafts), what may not (customer data, contracts, strategy papers). Who checks (whoever publishes). Who to ask in case of uncertainty (one person, not a committee).

Week 4: Rollout. 90 minutes of training for everyone, recorded for new employees. Not compliance training, but practical application: This is how you use the tools, this is how you avoid mistakes, this is how you get answers quickly.

The result after three months: Shadow AI usage fell from 45% to below 10%. No data protection incidents. And, more importantly: Employees use AI more than before because the uncertainty is gone.

Getting Started with Minimal Governance

If you are starting from scratch, you do not need a master plan. These five steps are enough to start:

Step 1: Inventory. What is already being used? Not by questioning, but by observation. IT logs, browser histories, conversations with teams.

Step 2: Focus. Define the three most important use cases. Not the riskiest, but the most frequent. That’s where the greatest leverage lies.

Step 3: One Tool, One Standard. One approved tool for each use case. With license, with support, with clear rules.

Step 4: One Page of Rules. Everything employees need to know on one page. Nobody reads more.

Step 5: One Contact Person. One person who answers questions within 24 hours. Not a committee, not a ticket system.

The rest comes later. Governance grows with usage. Those who regulate too much too early stifle innovation. Those who start too late lose control.
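For the inventory in Step 1, a first pass over IT logs can be very small. The sketch below assumes a simplified log with one `user domain` pair per line and a hand-maintained list of AI tool domains; the log format, the domains and all names are placeholders and will differ in any real environment.

```python
from collections import defaultdict

# Hypothetical watch list of AI tool domains and the approved subset.
AI_DOMAINS = {"chat.example-ai.com", "images.example-ai.com"}
APPROVED_DOMAINS = {"chat.example-ai.com"}


def inventory(log_lines: list[str]) -> dict[str, int]:
    """Count distinct users per AI domain from 'user domain' log lines."""
    users_by_domain = defaultdict(set)
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip lines that do not match the assumed format
        user, domain = parts
        if domain in AI_DOMAINS:
            users_by_domain[domain].add(user)
    return {domain: len(users) for domain, users in users_by_domain.items()}


def unapproved(counts: dict[str, int]) -> list[str]:
    """List the AI domains in use that are not on the approved list."""
    return sorted(d for d in counts if d not in APPROVED_DOMAINS)
```

The output is a starting point for the conversations with teams, not a replacement for them.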

Finding the Right Balance

Effective AI Governance is not a question of more or fewer rules. It is a question of the right rules. Rules that provide guidance in day-to-day work instead of slowing people down. Rules that empower people instead of controlling them. Rules that adapt instead of ossifying.

The companies that use AI successfully have understood: Governance is not an obstacle to innovation. It is the prerequisite for innovation to scale.

Frequently asked questions

What is Shadow AI and why is it a problem? Shadow AI refers to the use of AI tools without the knowledge or approval of the IT department. The problem: According to Zendesk 2025, 49% of service employees are already using external GenAI tools, almost three times as many as in the previous year. According to Microsoft/Civey, the figure is 45% in German federal authorities. Companies lose control over their information and risk GDPR violations.

Does every company need AI Governance? Yes, as soon as employees use AI. And they already do: According to CyberArk, 94% of German companies use AI, but 66% do not have complete control over unauthorized tools. The question is not whether to have governance, but how much. For a 20-person team, one page of rules is enough. A corporation needs more structure.

How much Governance is too much? It is too much when employees circumvent the rules because they are too complicated or too slow. The rule of thumb: If the approval takes longer than the task itself, something is wrong.

What does introducing AI Governance cost? To start with: little. The biggest cost is time, not money. An inventory of existing usage, one page of rules, a contact person, a training session. This can be implemented in two to four weeks.

How do I measure the success of AI Governance? Three key figures: Proportion of employees who use approved tools (target: over 80%). Number of security incidents caused by AI (target: zero). Time from request to approval for new use cases (target: under 48 hours).
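These three key figures can be computed with a few lines once the raw numbers are available. The sketch below uses the targets named above; using the median for approval time is an assumption of this example, as are the function and key names.

```python
import statistics


def approved_tool_share(users_on_approved: int, total_ai_users: int) -> float:
    """Share of AI-using employees who use approved tools."""
    if total_ai_users == 0:
        return 0.0
    return users_on_approved / total_ai_users


def median_approval_hours(hours: list[float]) -> float:
    """Median time from request to approval, in hours."""
    return statistics.median(hours)


def meets_targets(share: float, incidents: int, approval_hours: float) -> dict[str, bool]:
    """Compare the three key figures with the targets from the text."""
    return {
        "approved_tool_share": share > 0.80,   # target: over 80%
        "security_incidents": incidents == 0,  # target: zero
        "approval_time": approval_hours < 48,  # target: under 48 hours
    }
```

With 170 of 200 AI users on approved tools, no incidents and approval times of 12, 30 and 60 hours, all three targets are met, since the share is 85% and the median approval time is 30 hours.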

Do I have to comply with the EU AI Act? Yes. The AI literacy obligation has applied since February 2025: Companies must ensure that employees who work with AI are sufficiently trained. According to Bitkom, however, only 20% of German employees have received AI training. In November 2025, the EU announced simplifications with the Digital Omnibus: Deadlines for high-risk AI are to be postponed to the end of 2027. According to Bitkom, 46% of German companies are calling for a reform of the EU AI Act, a signal that businesses also prefer pragmatic governance.

Would you like to introduce AI Governance without slowing down your teams?

Together we develop a framework that suits your company.

